Ascendo Pte Ltd

- Address: 85, Playfair Road #06-02 Tong Yuan Industrial Building Singapore 368000

- Tel: +65 6741 1218

- Email: [email protected]

© 2026 Ascendo Pte Ltd. All Rights Reserved. Website By Creative eWorld Pte Ltd.

Video Analytics processes video in real time and transforms it into intelligent data. It automatically generates descriptions of what is happening in the video (metadata) and is used to detect and track objects, which can be categorized as persons, vehicles and other objects in the video stream. This information forms the basis for actions, e.g. deciding whether security staff should be notified or whether a higher-quality recording stream should be used. Video Analytics turns simple IP video surveillance into business intelligence.

Video Analytics is a far more practical way to find the incidents you are looking for than manually reviewing hours of surveillance video. Using video analytics increases the efficiency of your security monitoring process and reduces the workload on security and management staff.

When Video Analytics is used in conjunction with a Video Management System (VMS), you can act upon the metadata it generates. Surveillance automation allows you to create rules that alert security personnel to specific events of interest.
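As a rough illustration of rule-based surveillance automation, the sketch below matches metadata events against operator-defined rules. The `Rule` class and the event keys `camera` and `event` are hypothetical names chosen for this example; they do not reflect the API of any particular VMS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical alert rule: fire when an event type is seen on a camera."""
    name: str
    camera: str
    event: str


def matching_alerts(events, rules):
    """Return the names of the rules triggered by a list of metadata events.

    Each event is a plain dict with the assumed keys "camera" and "event".
    """
    alerts = []
    for ev in events:
        for rule in rules:
            if ev["camera"] == rule.camera and ev["event"] == rule.event:
                alerts.append(rule.name)
    return alerts


rules = [Rule("Lobby fall alert", camera="lobby", event="fall_detected")]
events = [
    {"camera": "lobby", "event": "motion"},
    {"camera": "lobby", "event": "fall_detected"},
    {"camera": "carpark", "event": "fall_detected"},
]
print(matching_alerts(events, rules))  # ['Lobby fall alert']
```

Only the fall on the lobby camera raises an alert here, because the single rule is bound to that camera.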

To reduce loss, theft and vandalism

Creating staff and visitor safety

Very high scalability

Remote Monitoring

Improve accessibility

No recurring yearly main license

Reduce installation cost

Improve productivity

Reduce manpower crunch

Improves ease of installation and implementation

Integrate with Video Management Software System

The Fall Detection module is designed to detect people who have fallen.

Note: The module does not detect the process of falling. It treats a person lying down as a fallen person.

It is possible to enable the frame highlighting of the fall scene, both in live view and archive playback. When falls are detected, Fall detected events are generated and recorded in the Event log.

Note: The module operates on a neural network using a video card (GPU). It is recommended to install the Eocortex Neural Network Special package.

Compatibility with other modules

| Requires Eocortex motion detector | Neural Network: Standard | Neural Network: Special | Compatible with modules | Incompatible with modules |
|---|---|---|---|---|
| √ | √ | √ | | |

| Symbol | Meaning |
|---|---|
| √ | supported and required for the module to work |
| + | supported and provides additional features of the module |
| – | not supported or not required for the module to work |
| ⚠ | not recommended for use with the current module |

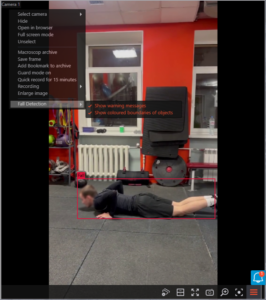

⚠ Warning: This module works only on cameras on which it has been enabled by the administrator of the video surveillance system.

To display frames around people who have fallen, open the context menu of the cell and, under the Fall Detection subitem, select Show colored boundaries of objects.

⚠ Warning: The neural network package must be installed before using the module.

To use the module, enable and set up the software motion detector, then enable and set up the module itself.

Launch the Eocortex Configurator, go to the Cameras tab, select a camera in the list on the left side of the page, and set up the motion detector on the Motion detector tab on the right side of the page.

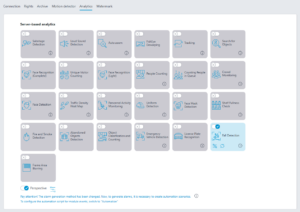

Then switch to the Analytics tab and enable the module using the toggle.

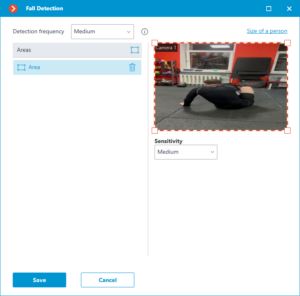

Clicking the button opens the module setup window.

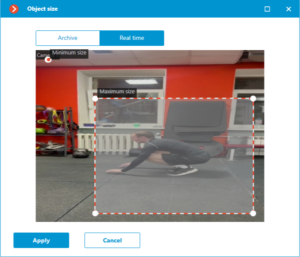

When setting up the module, select the detection frequency, set the minimum and maximum sizes of people to be detected, and define the areas in which falls will be monitored.

Increasing the detection frequency allows falls to be detected faster, including those in which the person immediately stands up, but it also increases the load on the CPU and GPU.
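The trade-off can be made concrete with simple arithmetic. The sketch below assumes that the detection frequency is expressed as analyses per second per camera and that each analysis costs one neural network run; both are assumptions made for illustration, not documented properties of the module.

```python
def inferences_per_second(cameras: int, detections_per_second: float) -> float:
    """Total neural network runs per second across all cameras (assumed model)."""
    return cameras * detections_per_second


def max_detection_delay(detections_per_second: float) -> float:
    """Longest gap, in seconds, between two consecutive analyses of one camera."""
    return 1.0 / detections_per_second


# Compare a few hypothetical frequencies for an 8-camera installation.
for rate in (1, 2, 5):
    print(rate, inferences_per_second(8, rate), max_detection_delay(rate))
```

Under these assumptions the load grows linearly with the frequency, while the longest time a fall can go unanalysed shrinks in inverse proportion.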

When setting up detection areas, keep in mind that a person is not detected if the center of their image lies outside the specified areas.
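The center rule can be sketched as a point-in-polygon test on the center of a person's bounding box. The box format `(x, y, width, height)`, the polygon representation of an area, and the function names are assumptions made for this example; the module's internal logic is not documented here.

```python
def bbox_center(box):
    """Center point of a bounding box given as (x, y, width, height)."""
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def point_in_polygon(point, polygon):
    """Ray-casting test: True if the point lies inside the polygon."""
    px, py = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Consider only edges that straddle the horizontal line through the point.
        if (y1 > py) != (y2 > py):
            # x coordinate at which the edge crosses that horizontal line.
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def is_monitored(box, areas):
    """True if the box center falls inside at least one detection area."""
    center = bbox_center(box)
    return any(point_in_polygon(center, area) for area in areas)


area = [(0, 0), (100, 0), (100, 100), (0, 100)]
print(is_monitored((80, 40, 30, 20), [area]))  # True: center is (95, 50)
print(is_monitored((90, 40, 30, 20), [area]))  # False: center is (105, 50)
```

The second box still overlaps the area, but its center lies outside, so under this rule it would be ignored. Drawing areas slightly larger than the floor region of interest avoids missing people near the edges.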

Sensitivity is selected separately for each area.

The person size setting is shared by all areas. In every area, therefore, falls are detected only for people who fit within the specified size range.
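A minimal sketch of such a shared size filter follows. It assumes that sizes are given as `(width, height)` in pixels and that detections are `(x, y, width, height)` boxes; these formats and names are hypothetical and serve only to show that one range applies to every detection, regardless of area.

```python
def fits_size_range(box, min_size, max_size):
    """True if the box width and height both lie within the allowed range."""
    _, _, w, h = box
    min_w, min_h = min_size
    max_w, max_h = max_size
    return min_w <= w <= max_w and min_h <= h <= max_h


# One size range shared by all areas (hypothetical pixel values).
MIN_SIZE = (40, 40)
MAX_SIZE = (400, 400)

detections = [
    (10, 10, 20, 30),    # too small, ignored in any area
    (50, 60, 120, 80),   # within range
    (0, 0, 600, 450),    # too large, ignored in any area
]
kept = [box for box in detections if fits_size_range(box, MIN_SIZE, MAX_SIZE)]
print(kept)  # [(50, 60, 120, 80)]
```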

⚠ Warning: The module starts working only after the settings are applied.

⚠ Warning: The neural network package must be installed before using the module.

The following equipment is required to use this neural network-based module:

If the package will be installed on a virtual machine, it may additionally be required to:

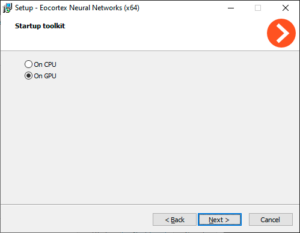

Note: The module operates on a neural network using a video card (GPU). It is recommended to install the Eocortex Neural Networks Special package.

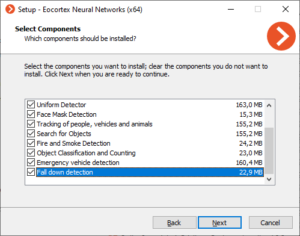

While installing the package, select the relevant component.

It is recommended to use a graphics card (GPU) to run the module.
